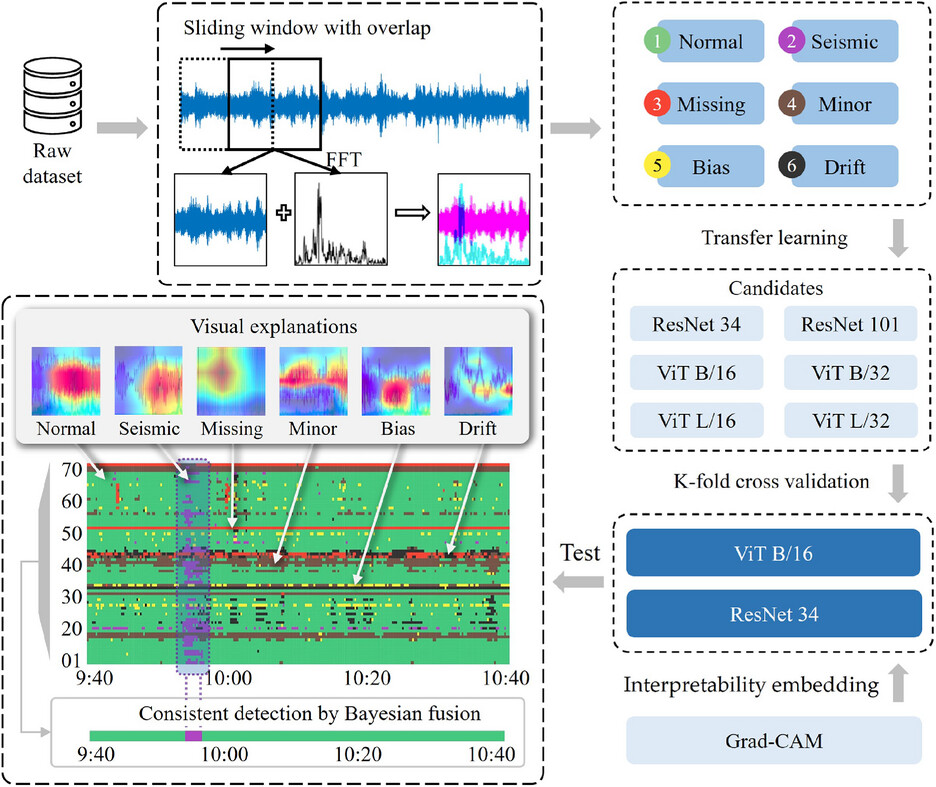

Abstract: Structural health monitoring (SHM) aims to assess the performance of civil infrastructure and ensure its safety. Automated detection of in situ events of interest, such as earthquakes, from extensive continuous monitoring data is important for the timeliness of subsequent data analysis. To overcome the poor timeliness of manual identification and the inconsistency among sensors, this paper proposes an automated, interpretable, and robust seismic event detection procedure. The raw time series from each sensor is transformed into image data, which improves class separability and makes the data visually understandable. Vision Transformers (ViTs) and Residual Networks (ResNets), aided by a heat map–based visual interpretation technique, are used for image classification. Multiple types of faulty data that could disturb seismic event detection are included in the classification. The divergent results from multiple sensors are then combined by Bayesian fusion to produce a consistent seismic detection result. A real-world monitoring data set of four seismic responses of a pair of long-span bridges is used for method validation. At the classification stage, ResNet 34 achieved the best accuracy of over 90% with minimal training cost. After Bayesian fusion, globally consistent and accurate seismic detection results can be obtained using a ResNet or ViT. The proposed approach effectively localizes seismic events within multisource, multifault monitoring data, achieving automated and consistent seismic event detection.
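The fusion step can be illustrated with a minimal sketch. The abstract does not state the exact fusion rule, so the code below assumes a naive Bayes style combination: each sensor's classifier outputs class probabilities, the sensors are treated as conditionally independent given the class, and the prior is uniform unless one is supplied. The function name `bayesian_fusion`, the class ordering, and the example probabilities are hypothetical and are not taken from the paper.

```python
import math


def bayesian_fusion(sensor_probs, prior=None):
    """Fuse per-sensor class probabilities into one posterior.

    Assumption (not from the paper): sensors are conditionally independent
    given the class, so
        P(c | x_1..x_n)  is proportional to  P(c) * prod_i [P(c | x_i) / P(c)].

    sensor_probs: list of lists, one list of class probabilities per sensor.
    prior: optional list of strictly positive prior class probabilities.
    """
    n_classes = len(sensor_probs[0])
    if prior is None:
        prior = [1.0 / n_classes] * n_classes  # uniform prior by default

    eps = 1e-12  # guards against log(0) for overconfident classifiers
    log_post = [math.log(p) for p in prior]
    for probs in sensor_probs:
        for k in range(n_classes):
            # A sensor posterior divided by the prior is proportional
            # to that sensor's likelihood for class k.
            log_post[k] += math.log(max(probs[k], eps)) - math.log(prior[k])

    # Normalize in a numerically stable way (subtract the max log value).
    peak = max(log_post)
    unnorm = [math.exp(v - peak) for v in log_post]
    total = sum(unnorm)
    return [u / total for u in unnorm]


# Hypothetical class order: [normal, seismic, faulty].
# Two sensors favor "seismic"; one disagrees and favors "normal".
sensor_outputs = [
    [0.2, 0.7, 0.1],
    [0.3, 0.6, 0.1],
    [0.6, 0.3, 0.1],
]
fused = bayesian_fusion(sensor_outputs)
decision = fused.index(max(fused))  # index of the fused class
```

Working in log space keeps the product stable when many sensors are fused, and the single dissenting sensor is outweighed by the two that agree, which is the kind of consistency the fusion step is meant to provide.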